Generating sudokus for fun and no profit

Once upon a time, I decided to create a complete Sudoku application as my grandma wanted to play some Sudokus on her computer, and I wasn't satisfied with the free offers available. The project went on for some years and finally led to sudoku.tn1ck.com - a free and open source Sudoku app without any tracking. While working on it, I went down the rabbit hole of generating Sudokus of a specified "human perceived" difficulty and accidentally created a quite thorough analysis of it.

Creating a Sudoku solver

First things first, to generate a Sudoku, we first have to solve one. The solver plays an integral part in the generation part, as we will use the iterations it needed to solve a Sudoku to measure the difficulty.

We will explore multiple algorithms and benchmark them against each other in how well we can use them to measure the difficulty of a Sudoku. We start with the most basic brute force algorithm and end up with the final one, based on seeing the Sudoku as a Constraint Satisfaction Problem (short CSP). We use a depth-first search (short DFS) for all our different strategies here. We abstract this by the following function. We make it Sudoku specific instead of completely generic for ease of use.

- Domain - A domain is the set of possible values for a variable. For a Sudoku, this is the numbers 1 - 9.

- CSP - A constraint satisfaction problem (CSP) is a problem defined by a set of variables, a set of domains, and a set of constraints. The goal is to assign a value to each variable such that the constraints are satisfied. For a Sudoku, the variables are the cells, the domains are the numbers 1 - 9, and the constraints are that every row, column, and square has to have unique numbers.

- Arc consistency - A variable is arc-consistent with another variable if every value in the domain of the first variable has a possible value in the domain of the second variable that satisfies the constraint between the two variables.

In the Sudoku example, if we have two cells in the same row, one with the domain [1, 2, 3] and the other with the domain [2], this is not arc consistent as 2 is in the domain of the first cell. If we remove the 2 from the domain of the first cell, it becomes arc consistent. - AC3 - The AC3 algorithm is an algorithm to create arc consistency. The main difference from the complete naive way to achieve arc consistency is that we do not loop over all constraints again when a domain of a variable changes, but only the relevant variables that have a constraint with it (in Sudoku the cells in the same row/column/square).

For every cell in the Sudoku, we keep track of its possible values. We reduce the possible values for every cell by checking the Sudoku constraints e.g. remove the numbers that are already in the same row/column/square. We do this as long until no domain is changing anymore. This "reduction of domains using the constraints" is arc consistency.

For very simple Sudokus, this is already enough to solve one (see the applet below), for harder ones, we are left with multiple options for every unfilled cell. This means we have to employ a search again. We use then the "Minimum remaining value" strategy again to select the cell with the least options and create new versions of the Sudoku with that cell filled with the possible values. This is called "domain splitting" in fancy computer science terms. We again count the number of iterations needed to solve the Sudoku.

The applet shows the domains of the applied AC3 algorithm in unfilled cells. If a Sudoku cannot be solved, one domain will become empty, which is shown as red, then the algorithm will backtrack.

Rating the difficulty of Sudokus

The main problem that one faces when generating a Sudoku is to assign the difficulty rating for a human solver. As we don’t want to manually verify every Sudoku we generate, we need an automatic way for us to group a newly generated Sudoku according to its difficulty. All our Sudoku solvers yield an iteration count, which we will use as our cost function. I'm relying here on the paper "Rating and generating Sudoku puzzles based on constraint satisfaction problems." by Fatemi, Bahare, Seyed Mehran Kazemi, and Nazanin Mehrasa.

In the paper, they download Sudokus of each difficulty section from websudoku.com, solve them by students volunteers, and then run their algorithm on it. They then took the average of each category and so they got an iteration-to-difficulty mapping.

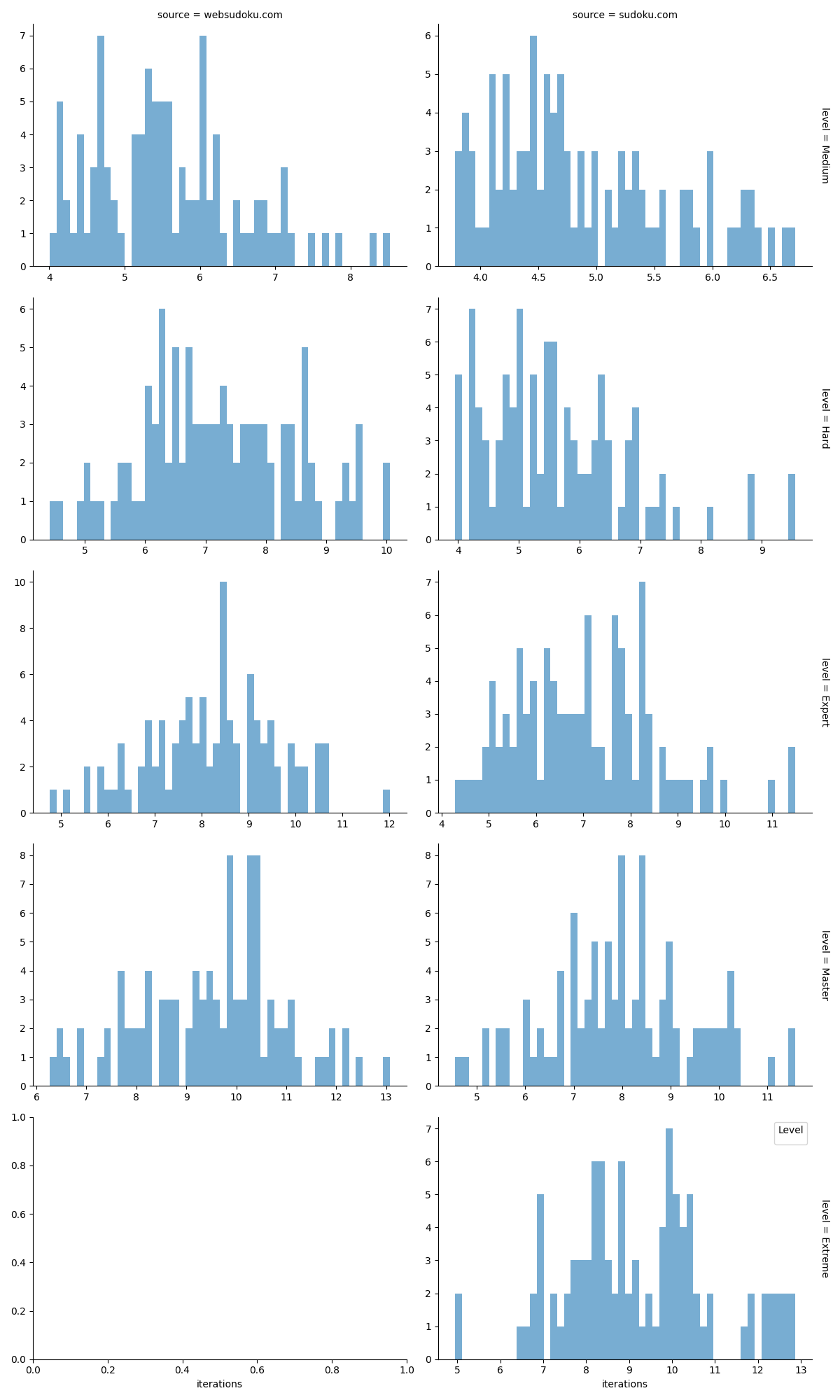

We will do basically the same, but actually publish the data and also try out how the "lesser" strategies work here for rating the difficulty. I fetched 100 Sudokus from websudoku.com (easy, medium, hard, evil) for each of its difficulty classes as well as from sudoku.com (easy, medium, hard, expert, master, extreme).

How well do the solvers measure human difficulty?

I'm not a data scientist, so take this analysis with a grain of salt, and I'm happy for any comments/proposals on how to improve it. As I don't have comments here yet, you can open an issue at the GitHub repository of this analysis instead.

This is the raw data on how many iterations each solver took to solve the Sudokus. You can also execute it yourself here or look at the source at GitHub. I skipped the most simple brute force, as it would take ages to compute even medium difficult Sudokus.

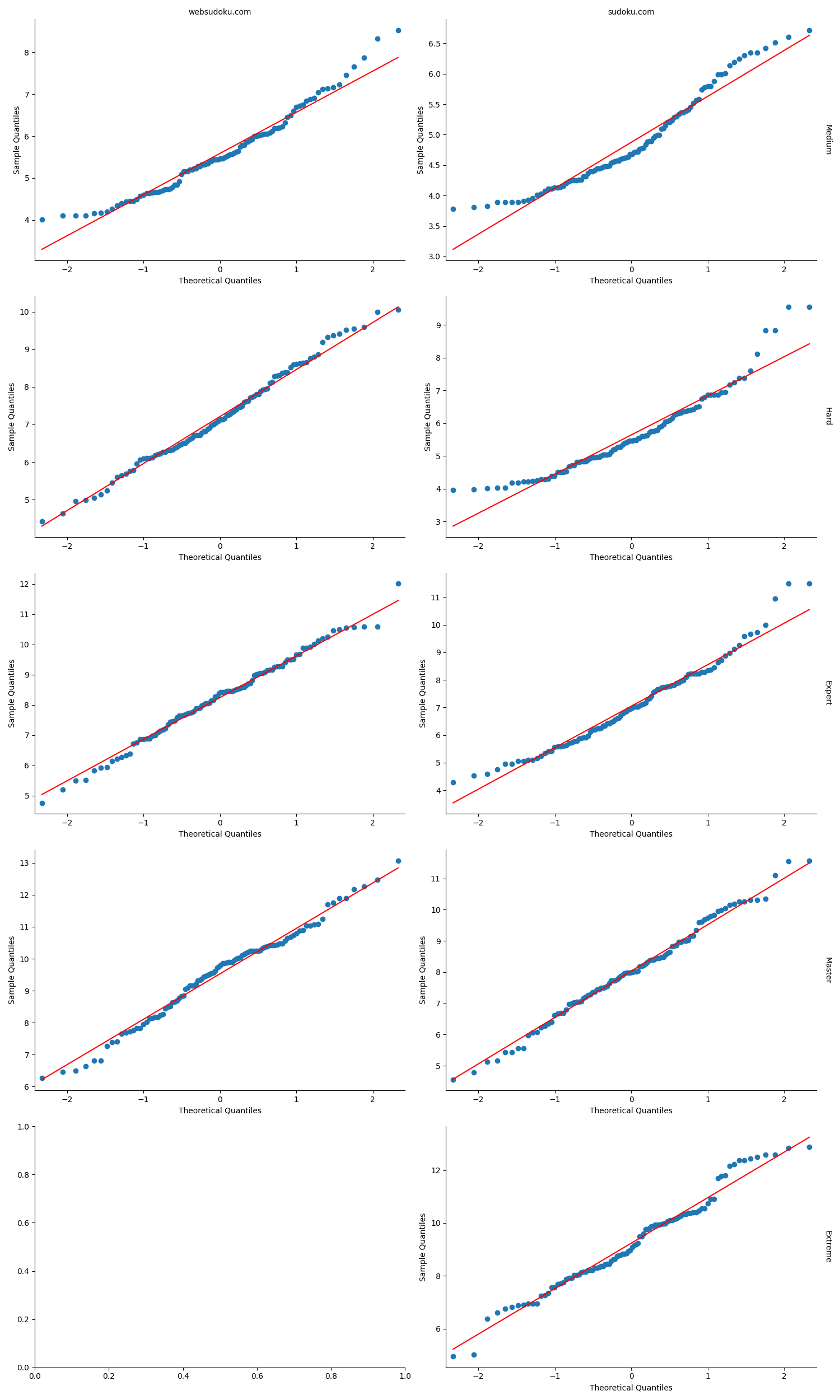

For all the charts, I used the logarithm on the iterations as especially the qq plot made it very obvious that the iterations count is exponential to the difficulty. Which is not surprising as solving Sudokus is famously NP hard. Any currently known algorithms to solve an NP-hard problem take exponential time.

Both the minimum remaining value and arc consistency algorithm look the same, but only for the more difficult levels as for the easy ones, they always need the same number of iterations.

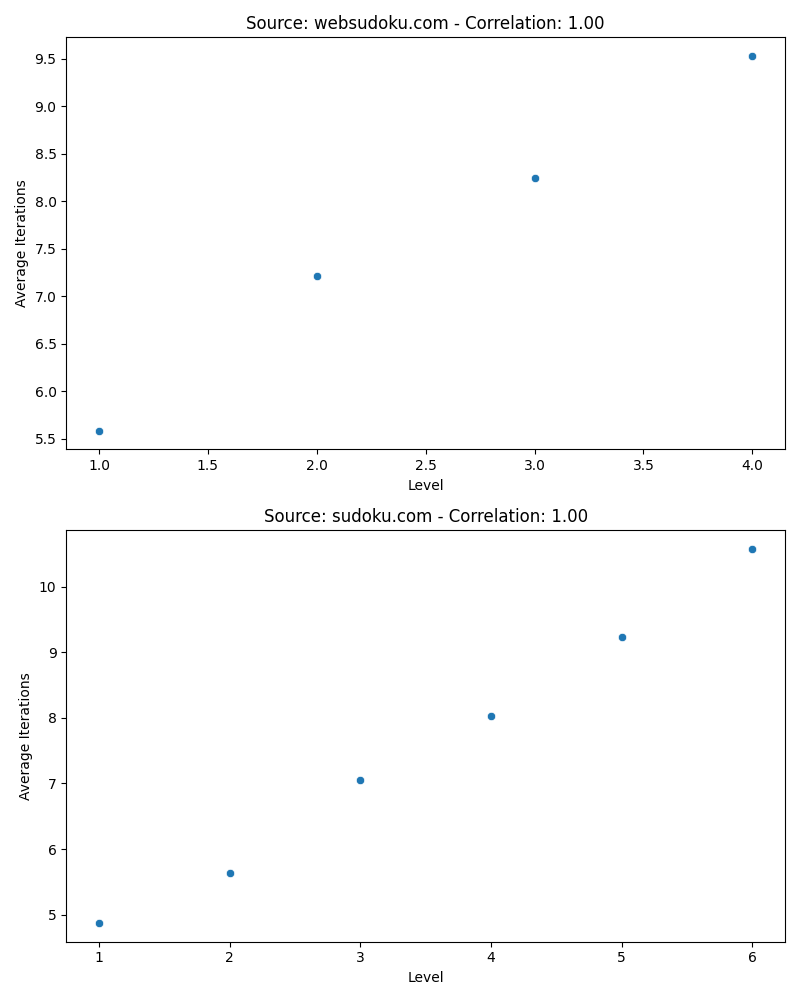

As we can see, they all correlate almost perfectly with the brute force having a perfect 1.0 correlation, making it highly likely that the websites use the iteration count as well for their level determination - and this makes the whole analysis problematic, as we still don't know if this is actually a good difficulty indicator for how a human perceives the difficulty. Actually solving Sudokus by a human and rating them by the time to get a ground truth is left as an exercise for the reader (Sorry I'm not paid for this.)

- Start with an empty grid and fill it with random numbers until it is a valid (and unique) Sudoku. We backtrack when the added number will lead to a non-solvable Sudoku. Note: The uniqueness constraint comes automatically as we continue to fill the Sudoku with numbers. Instead of stopping when it is unique, we could also stop when it is fully filled, but that wouldn't be helpful as we would have to delete numbers again in the next step.

- To generate a Sudoku of a wanted difficulty, we either remove numbers or add numbers until the reached difficulty is achieved.

- If we cannot delete any more numbers without making it non-unique, meaning we reached max difficulty, but the difficulty is below the requested one, start at 1 again. Note: We could also backtrack or add numbers again, but for my personal use, I found it better to save the Sudoku with the maximum achieved difficulty and start over, but by adding and removing numbers, one could theoretically reach the requested difficulty.

- If the call count is close to the requested value, return the Sudoku.

As point 3 points out, generating very difficult Sudokus can take quite some time as any generation method will struggle with the uniqueness constraint and has to randomly alter the Sudoku generation steps. Here is an applet for you to interactively run the Sudoku. The code is not crazy optimized, and we do have to do some heavy calculations, so be wary that your browser might freeze for a bit if you click solve.

- Find a sudoku that is solvable and unique:

- Decrease / increase difficulty as much as possible.Disabled: Sudoku is not unique and solvable yet.

Generation algorithm as described by the paper

As mentioned above, the algorithm described in the paper has some issues, which in effect make it very slow albeit still valid. Here is how they describe the algorithm:

"We use hill climbing to generate new puzzles having a call count close to the call count we need. In this method, first of all, we generate an initial puzzle with some random numbers inside it and calculate its cost function. Then in each iteration, we randomly change one element of this solution by adding, deleting, or changing a single number and calculate the cost function again.

After this, we check the new value of the cost function for this new puzzle and compare it to the previous one. If the cost is reduced, we accept the second puzzle as the new solution and otherwise, we undo the change. We do this process until meeting the stopping criterion.

The cost function for a given puzzle is infinity for puzzles with none or more than one solution. For other puzzles, the cost is the absolute value of the current puzzle’s call count minus the average call count of the given difficulty level. For example, if we want to generate an easy puzzle and we want to consider the values demonstrated in Table I, then the cost function for a puzzle having a unique solution is the absolute value of its call count minus 6.234043.

We stop the algorithm when we have puzzles with costs close to zero. Depending on the level of accuracy we need and the number of difficulty levels we have, we can define the closeness of the cost function to zero."

When I first read it, I thought "we generate an initial puzzle with some random numbers inside it" would mean that I could literally start with a Sudoku grid and put random numbers in it.

But one needs an already valid Sudoku as else the hill climbing will not work as the cost function for an invalid configuration should return infinite. While theoretically it still works, it takes far too long to stumble upon a valid configuration like this.

I’m not entirely sure if they meant to start with a valid Sudoku, but "initial puzzle with some random numbers" does not sound like it. Furthermore, "just adding, deleting, or changing a single number" is not efficient, as again, this can lead to an invalid configuration quickly, albeit the hill climbing will take care of that if you were in a valid configuration before.

I find my algorithm to be more efficient and elegant as it is guided by the Sudoku's constraints, namely we first create a solvable and unique Sudoku, making the hill climbing afterwards easier as our cost function will not return infinity. Their algorithm will spend most of their time trying to stumble upon a valid Sudoku.